This week's topic is EKS automation.

First, deploy the lab environment.

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick6.yaml

# Deploy the CloudFormation stack

Example: aws cloudformation deploy --template-file **eks-oneclick6.yaml** --stack-name **myeks** --parameter-overrides KeyName=**kp-gasida** SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=**AKIA5...** MyIamUserSecretAccessKey=**'CVNa2...'** ClusterBaseName=**myeks** --region ap-northeast-2

# After the stack finishes deploying, print the IP of the workstation EC2 instance

aws cloudformation describe-stacks --stack-name **myeks** --query 'Stacks[*].**Outputs[0]**.OutputValue' --output text

# SSH into the workstation EC2 instance

ssh -i **~/.ssh/kp-gasida.pem** ec2-user@$(aws cloudformation describe-stacks --stack-name **myeks** --query 'Stacks[*].Outputs[0].OutputValue' --output text)

# Switch to the default namespace

kubectl ns default

# (Optional) rename the context

NICK=<your nickname>

NICK=gasida

kubectl ctx

kubectl config rename-context admin@myeks.ap-northeast-2.eksctl.io $NICK

# ExternalDNS

MyDomain=<your domain>

echo "export MyDomain=<your domain>" >> /etc/profile

*MyDomain=gasida.link*

*echo "export MyDomain=gasida.link" >> /etc/profile*

MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

echo $MyDomain, $MyDnzHostedZoneId

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# AWS LB Controller

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

# Check the node IPs and store the private IPs in variables

N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath={.items[0].status.addresses[0].address})

N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

echo "export N1=$N1" >> /etc/profile

echo "export N2=$N2" >> /etc/profile

echo "export N3=$N3" >> /etc/profile

echo $N1, $N2, $N3

# Look up the node security group ID

NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values='*ng1*' --query "SecurityGroups[*].[GroupId]" --output text)

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.0/24

# SSH into the worker nodes

for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

# Check the ACM certificate ARN in the current region

CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

echo $CERT_ARN
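If the account holds more than one certificate in the region, the `--output text` query above returns every ARN on a single line separated by tabs, and that whole string ends up in the `certificate-arn` annotation. A minimal sketch, assuming the first certificate listed is the one issued for your domain, that keeps only the first ARN (`first_arn` is a made-up helper and the sample ARNs are placeholders):

```shell
#!/usr/bin/env bash
# Keep only the first ARN from the whitespace-separated output of
# `aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`.
first_arn() {
  local first
  # read splits the line on IFS (spaces/tabs); the first word lands in $first
  read -r first _ <<< "$1"
  printf '%s\n' "$first"
}

# Hypothetical output for an account with two certificates (tab-separated)
sample=$'arn:aws:acm:ap-northeast-2:111122223333:certificate/aaaa\tarn:aws:acm:ap-northeast-2:111122223333:certificate/bbbb'
first_arn "$sample"
```

In the lab this would be `CERT_ARN=$(first_arn "$CERT_ARN")` right after the lookup.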

# Add the repo

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# Create the values file

cat <<EOT > monitor-values.yaml

prometheus:

  prometheusSpec:

    podMonitorSelectorNilUsesHelmValues: false

    serviceMonitorSelectorNilUsesHelmValues: false

    retention: 5d

    retentionSize: "10GiB"

  ingress:

    enabled: true

    ingressClassName: alb

    hosts:

      - prometheus.$MyDomain

    paths:

      - /*

    annotations:

      alb.ingress.kubernetes.io/scheme: internet-facing

      alb.ingress.kubernetes.io/target-type: ip

      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

      alb.ingress.kubernetes.io/success-codes: 200-399

      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

      alb.ingress.kubernetes.io/group.name: study

      alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:

  defaultDashboardsTimezone: Asia/Seoul

  adminPassword: prom-operator

  ingress:

    enabled: true

    ingressClassName: alb

    hosts:

      - grafana.$MyDomain

    paths:

      - /*

    annotations:

      alb.ingress.kubernetes.io/scheme: internet-facing

      alb.ingress.kubernetes.io/target-type: ip

      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

      alb.ingress.kubernetes.io/success-codes: 200-399

      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

      alb.ingress.kubernetes.io/group.name: study

      alb.ingress.kubernetes.io/ssl-redirect: '443'

defaultRules:

  create: false

kubeControllerManager:

  enabled: false

kubeEtcd:

  enabled: false

kubeScheduler:

  enabled: false

alertmanager:

  enabled: false

EOT
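The `monitor-values.yaml` heredoc above uses an unquoted delimiter (`EOT`), so the shell substitutes `$MyDomain` and `$CERT_ARN` while the file is written. Quoting the delimiter (`'EOT'`) would keep them literal, and Helm would receive the raw `$MyDomain` text. A small sketch of the difference with placeholder values, plus a pre-flight `grep` before `helm install`:

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"   # scratch directory for the demo files

MyDomain=example.com                                                    # placeholder domain
CERT_ARN=arn:aws:acm:ap-northeast-2:111122223333:certificate/example    # placeholder ARN

# Unquoted delimiter: $MyDomain and $CERT_ARN are expanded when the file is written
cat <<EOT > expanded.yaml
hosts:
  - grafana.$MyDomain
certificate-arn: $CERT_ARN
EOT

# Quoted delimiter: the body is written literally, nothing is expanded
cat <<'EOT' > literal.yaml
hosts:
  - grafana.$MyDomain
EOT

# Pre-flight check: no '$' should survive in a values file meant for helm
if grep -q '\$' expanded.yaml; then
  echo "expanded.yaml still contains an unexpanded variable"
else
  echo "expanded.yaml OK"
fi
cat literal.yaml
```

An unset variable does not leave a `$` behind. It expands to an empty string (for example `grafana.`), so it is worth also eyeballing the host names in the generated file.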

# Deploy

kubectl create ns monitoring

helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' -f monitor-values.yaml --namespace monitoring

# Deploy metrics-server

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

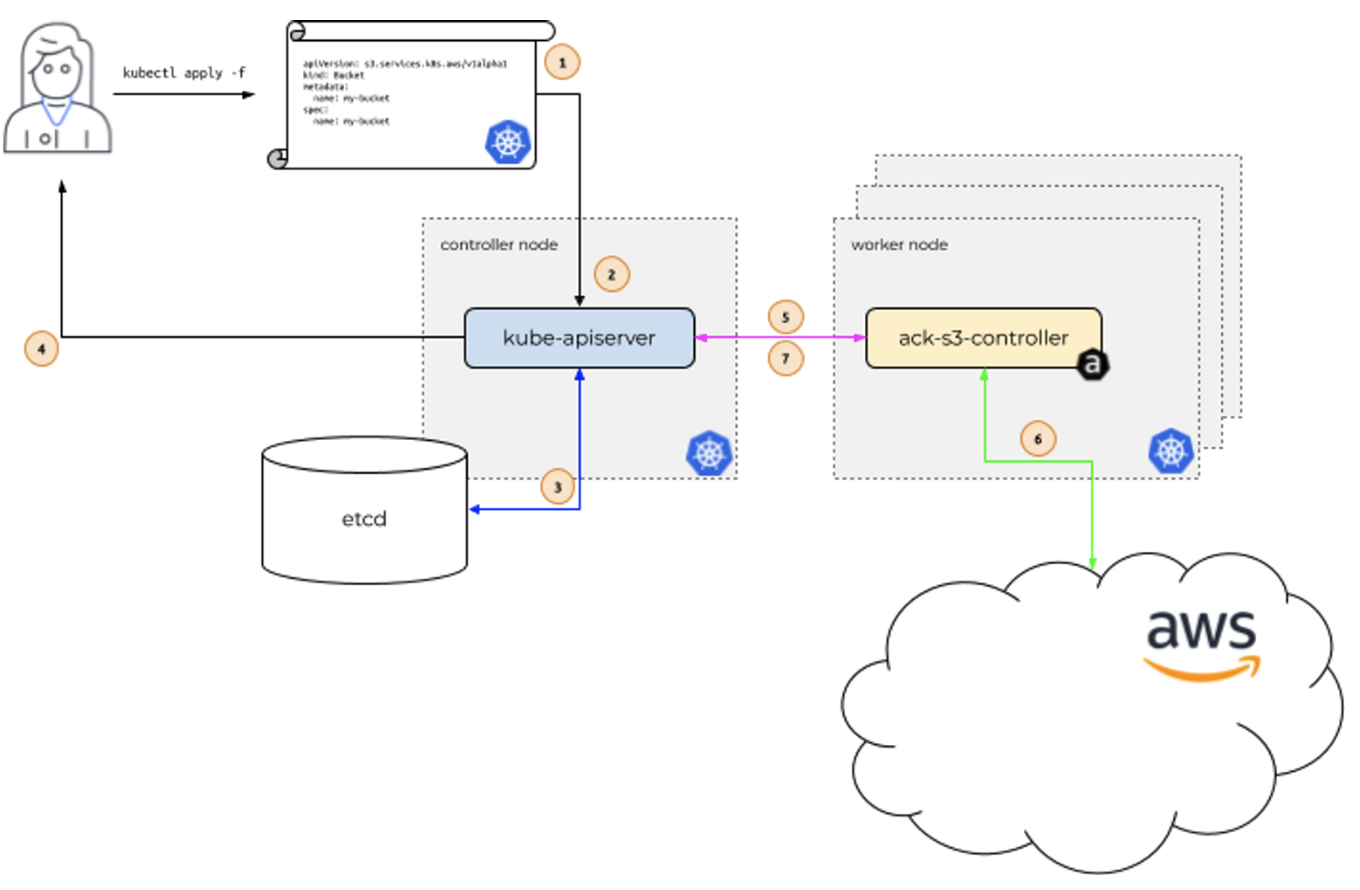

ACK (AWS Controllers for Kubernetes)

ACK lets you define and use AWS service resources directly from Kubernetes. In other words, you can create and manage AWS resources from inside Kubernetes.

However, not every AWS service is supported.

- Maintenance phases: PREVIEW (testing stage, not recommended for production), GENERAL AVAILABILITY (recommended for production), DEPRECATED, NOT SUPPORTED

- GA services: ApiGatewayV2, CloudTrail, DynamoDB, EC2, ECR, EKS, IAM, KMS, Lambda, MemoryDB, RDS, S3, SageMaker…

- Preview services: ACM, ElastiCache, EventBridge, MQ, Route 53, SNS, SQS…

To manage S3 from Kubernetes (with Helm), proceed as follows.

# Set the service name variable

**export SERVICE=s3**

# helm 차트 다운로드

~~#aws ecr-public get-login-password --region us-east-1 | helm registry login --username AWS --password-stdin public.ecr.aws~~

export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz
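The `RELEASE_VERSION` pipeline pulls the `tag_name` field out of the GitHub releases API response and drops the leading `v`, since the `helm pull --version` call expects the bare version (that is what the original `cut -c 2-` assumes). A sketch of the same parsing as a function that reads JSON on stdin, so it can be checked against a sample. The JSON and the `v1.0.4` tag below are hypothetical, and `${tag#v}` is used instead of `cut -c 2-` so a tag without a `v` is left intact:

```shell
#!/usr/bin/env bash
# Extract "tag_name" from a GitHub releases JSON document on stdin
# and strip a leading "v" (v1.2.3 -> 1.2.3), like the grep/cut pipeline above.
parse_release_version() {
  local tag
  tag=$(grep '"tag_name":' | head -n 1 | cut -d'"' -f4)
  printf '%s\n' "${tag#v}"
}

# Hypothetical, trimmed API response
sample='{
  "url": "https://api.github.com/repos/aws-controllers-k8s/s3-controller/releases/1",
  "tag_name": "v1.0.4",
  "name": "v1.0.4"
}'
printf '%s\n' "$sample" | parse_release_version
```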

# Check the helm chart

tree ~/$SERVICE-chart

# Install the ACK S3 controller

export ACK_SYSTEM_NAMESPACE=ack-system

export AWS_REGION=ap-northeast-2

helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation

helm list --namespace $ACK_SYSTEM_NAMESPACE

kubectl -n ack-system get pods

kubectl get crd | grep $SERVICE

buckets.s3.services.k8s.aws 2022-04-24T13:24:00Z

kubectl get all -n ack-system

kubectl get-all -n ack-system

kubectl describe sa -n ack-system ack-s3-controller

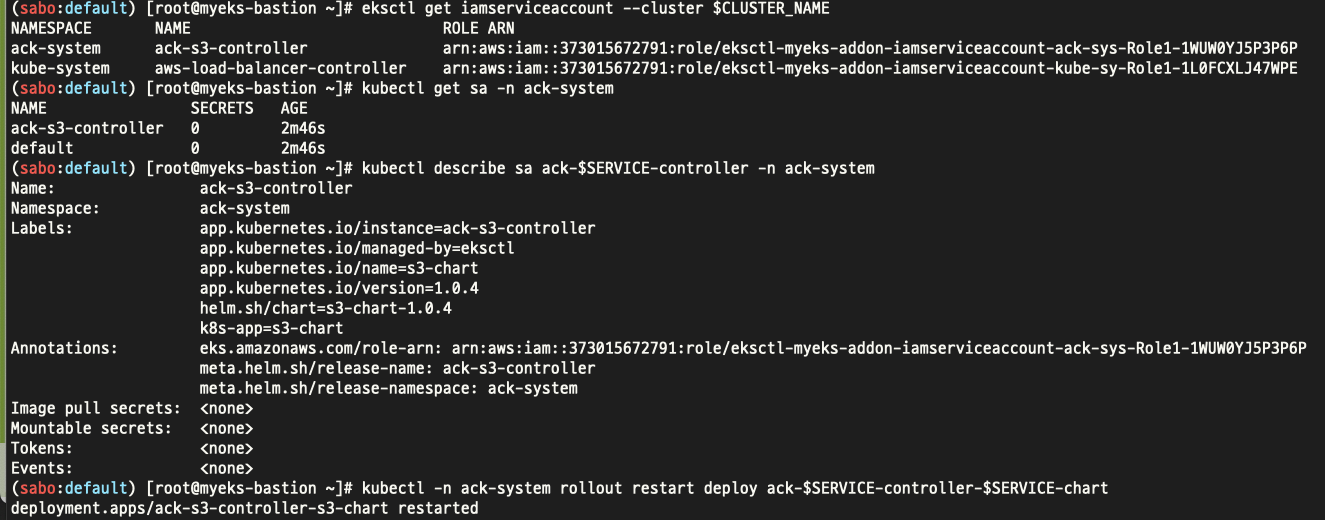

First, install the ACK S3 controller as shown above...

...and then configure IRSA as shown above.

Now let's create an S3 bucket.

# [Terminal 1] Monitoring

watch -d aws s3 ls

# Create the manifest for the S3 bucket

export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

export BUCKET_NAME=my-ack-s3-bucket-$AWS_ACCOUNT_ID

read -r -d '' BUCKET_MANIFEST <<EOF

apiVersion: s3.services.k8s.aws/v1alpha1

kind: Bucket

metadata:

  name: $BUCKET_NAME

spec:

  name: $BUCKET_NAME

EOF

echo "${BUCKET_MANIFEST}" > bucket.yaml

cat bucket.yaml | yh
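`read -r -d '' BUCKET_MANIFEST <<EOF` works fine interactively, but `read` returns a non-zero status when it reaches end of input before the (empty) delimiter, so the same line aborts a script running under `set -e`. A minimal alternative sketch that renders the same `Bucket` manifest from a function, with an optional tag (`render_bucket` is a made-up name and `111122223333` is a placeholder account ID):

```shell
#!/usr/bin/env bash
set -euo pipefail
cd "$(mktemp -d)"   # scratch directory for the demo files

# Print an ACK S3 Bucket manifest. Args: bucket name, optional tag key and value.
render_bucket() {
  local name="$1" tag_key="${2:-}" tag_value="${3:-}"
  cat <<EOF
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: ${name}
spec:
  name: ${name}
EOF
  # Append the tagging block only when a tag key was given
  if [[ -n "$tag_key" ]]; then
    cat <<EOF
  tagging:
    tagSet:
    - key: ${tag_key}
      value: ${tag_value}
EOF
  fi
}

render_bucket "my-ack-s3-bucket-111122223333" > bucket.yaml
render_bucket "my-ack-s3-bucket-111122223333" myTagKey myTagValue > bucket-tagged.yaml
cat bucket-tagged.yaml

# For comparison: `read -d ''` fails at end of input, which set -e would treat as fatal
status=0
read -r -d '' _ <<< "x" || status=$?
echo "read -d '' exit status: $status"
```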

# Create the S3 bucket

aws s3 ls

**kubectl create -f bucket.yaml**

*bucket.s3.services.k8s.aws/my-ack-s3-bucket-**<my account id>** created*

# Verify the S3 bucket

aws s3 ls

**kubectl get buckets**

kubectl describe bucket/$BUCKET_NAME | head -6

Name: my-ack-s3-bucket-**<my account id>**

Namespace: default

Labels: <none>

Annotations: <none>

API Version: s3.services.k8s.aws/v1alpha1

Kind: Bucket

aws s3 ls | grep $BUCKET_NAME

2022-04-24 18:02:07 my-ack-s3-bucket-**<my account id>**

# Update the S3 bucket: add tag information

read -r -d '' BUCKET_MANIFEST <<EOF

apiVersion: s3.services.k8s.aws/v1alpha1

kind: Bucket

metadata:

  name: $BUCKET_NAME

spec:

  name: $BUCKET_NAME

  tagging:

    tagSet:

    - key: myTagKey

      value: myTagValue

EOF

echo "${BUCKET_MANIFEST}" > bucket.yaml

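# Apply the bucket update (the warning that the last-applied-configuration annotation is missing and will be patched automatically can be ignored)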

**kubectl apply -f bucket.yaml**

# Verify the S3 bucket update

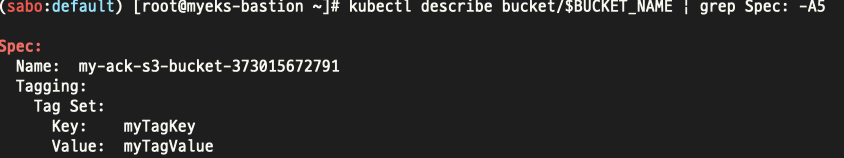

kubectl describe bucket/$BUCKET_NAME | grep Spec: -A5

Spec:

Name: my-ack-s3-bucket-**<my account id>**

Tagging:

Tag Set:

Key: myTagKey

Value: myTagValue

# Delete the S3 bucket

**kubectl delete -f bucket.yaml**

# verify the bucket no longer exists

kubectl get bucket/$BUCKET_NAME

aws s3 ls | grep $BUCKET_NAME

As shown above, the S3 bucket was created with very little effort.

Next, after updating the deployed bucket,

we can see that the tag has been added, as shown above.

The EC2 controller is deployed as follows (almost identical to S3).

# Set the service name variable and download the helm chart

**export SERVICE=ec2**

export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

# Check the helm chart

tree ~/$SERVICE-chart

# Install the ACK EC2 controller

export ACK_SYSTEM_NAMESPACE=ack-system

export AWS_REGION=ap-northeast-2

helm install -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation

helm list --namespace $ACK_SYSTEM_NAMESPACE

kubectl -n $ACK_SYSTEM_NAMESPACE get pods -l "app.kubernetes.io/instance=ack-$SERVICE-controller"

**kubectl get crd | grep $SERVICE**

dhcpoptions.ec2.services.k8s.aws 2023-05-30T12:45:13Z

elasticipaddresses.ec2.services.k8s.aws 2023-05-30T12:45:13Z

instances.ec2.services.k8s.aws 2023-05-30T12:45:13Z

internetgateways.ec2.services.k8s.aws 2023-05-30T12:45:13Z

natgateways.ec2.services.k8s.aws 2023-05-30T12:45:13Z

routetables.ec2.services.k8s.aws 2023-05-30T12:45:13Z

securitygroups.ec2.services.k8s.aws 2023-05-30T12:45:13Z

subnets.ec2.services.k8s.aws 2023-05-30T12:45:13Z

transitgateways.ec2.services.k8s.aws 2023-05-30T12:45:13Z

vpcendpoints.ec2.services.k8s.aws 2023-05-30T12:45:13Z

vpcs.ec2.services.k8s.aws 2023-05-30T12:45:13Z

# Create an iamserviceaccount - AWS IAM role bound to a Kubernetes service account

eksctl create iamserviceaccount --name ack-$SERVICE-controller --namespace $ACK_SYSTEM_NAMESPACE --cluster $CLUSTER_NAME --attach-policy-arn $(aws iam list-policies --query "Policies[?PolicyName=='AmazonEC2FullAccess'].Arn" --output text) --override-existing-serviceaccounts --approve

# Verify >> in the web console, check the CloudFormation stack >> IAM role

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

# Inspecting the newly created Kubernetes Service Account, we can see the role we want it to assume in our pod.

kubectl get sa -n $ACK_SYSTEM_NAMESPACE

kubectl describe sa ack-$SERVICE-controller -n $ACK_SYSTEM_NAMESPACE

# Restart ACK service controller deployment using the following commands.

kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

# Confirm that IRSA added the env vars and volume to the pod

kubectl describe pod -n $ACK_SYSTEM_NAMESPACE -l k8s-app=$SERVICE-chart

...
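The S3, EC2, and RDS controller installs in this post repeat the same steps, with only `SERVICE` and the IAM policy changing. A sketch of those steps as one function; `install_ack_controller` and the `DRY_RUN` switch are hypothetical helpers, and with `DRY_RUN=1` (the default here) the commands are only printed so the sequence can be reviewed before touching the cluster. The policy ARN is built directly as `arn:aws:iam::aws:policy/<name>`, the format of root-path AWS managed policies such as `AmazonEC2FullAccess`, instead of querying `aws iam list-policies`:

```shell
#!/usr/bin/env bash
set -euo pipefail

# run: print the command when DRY_RUN=1 (the default here), otherwise execute it
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# install_ack_controller <service> <aws-managed-policy-name> <chart-version>
# Mirrors the manual steps: pull chart -> helm install -> IRSA -> restart controller.
install_ack_controller() {
  local service="$1" policy="$2" version="$3"
  local ns="${ACK_SYSTEM_NAMESPACE:-ack-system}"
  local region="${AWS_REGION:-ap-northeast-2}"
  local cluster="${CLUSTER_NAME:?CLUSTER_NAME must be set}"

  run helm pull "oci://public.ecr.aws/aws-controllers-k8s/${service}-chart" --version="${version}"
  run tar xzf "${service}-chart-${version}.tgz"
  run helm install --create-namespace -n "$ns" "ack-${service}-controller" --set aws.region="$region" "./${service}-chart"
  run eksctl create iamserviceaccount --name "ack-${service}-controller" --namespace "$ns" --cluster "$cluster" --attach-policy-arn "arn:aws:iam::aws:policy/${policy}" --override-existing-serviceaccounts --approve
  run kubectl -n "$ns" rollout restart deploy "ack-${service}-controller-${service}-chart"
}

export CLUSTER_NAME="${CLUSTER_NAME:-myeks}"
# 1.0.0 is a placeholder; in the lab pass the $RELEASE_VERSION looked up earlier
install_ack_controller ec2 AmazonEC2FullAccess 1.0.0
```

Setting `DRY_RUN=0` would run the commands for real; the dry run keeps the default safe.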

Creating and deleting a VPC and a subnet works as follows.

# [Terminal 1] Monitoring

while true; do aws ec2 describe-vpcs --query 'Vpcs[*].{VPCId:VpcId, CidrBlock:CidrBlock}' --output text; echo "-----"; sleep 1; done

# Create the VPC

cat <<EOF > vpc.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: VPC

metadata:

  name: vpc-tutorial-test

spec:

  cidrBlocks:

  - 10.0.0.0/16

  enableDNSSupport: true

  enableDNSHostnames: true

EOF

**kubectl apply -f vpc.yaml**

*vpc.ec2.services.k8s.aws/vpc-tutorial-test created*

# Verify the VPC

kubectl get vpcs

kubectl describe vpcs

**aws ec2 describe-vpcs --query 'Vpcs[*].{VPCId:VpcId, CidrBlock:CidrBlock}' --output text**

# [Terminal 1] Monitoring

VPCID=$(kubectl get vpcs vpc-tutorial-test -o jsonpath={.status.vpcID})

while true; do aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --query 'Subnets[*].{SubnetId:SubnetId, CidrBlock:CidrBlock}' --output text; echo "-----"; sleep 1 ; done

# Create a subnet

VPCID=$(kubectl get vpcs vpc-tutorial-test -o jsonpath={.status.vpcID})

cat <<EOF > subnet.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Subnet

metadata:

  name: subnet-tutorial-test

spec:

  cidrBlock: 10.0.0.0/20

  vpcID: $VPCID

EOF

**kubectl apply -f subnet.yaml**

# Verify the subnet

kubectl get subnets

kubectl describe subnets

**aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --query 'Subnets[*].{SubnetId:SubnetId, CidrBlock:CidrBlock}' --output text**

# Delete the resources

kubectl delete -f subnet.yaml && kubectl delete -f vpc.yaml

Next, let's create a full VPC workflow.

cat <<EOF > vpc-workflow.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: VPC

metadata:

  name: tutorial-vpc

spec:

  cidrBlocks:

  - 10.0.0.0/16

  enableDNSSupport: true

  enableDNSHostnames: true

  tags:

    - key: name

      value: vpc-tutorial

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: InternetGateway

metadata:

  name: tutorial-igw

spec:

  vpcRef:

    from:

      name: tutorial-vpc

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: NATGateway

metadata:

  name: tutorial-natgateway1

spec:

  subnetRef:

    from:

      name: tutorial-public-subnet1

  allocationRef:

    from:

      name: tutorial-eip1

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: ElasticIPAddress

metadata:

  name: tutorial-eip1

spec:

  tags:

    - key: name

      value: eip-tutorial

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: RouteTable

metadata:

  name: tutorial-public-route-table

spec:

  vpcRef:

    from:

      name: tutorial-vpc

  routes:

  - destinationCIDRBlock: 0.0.0.0/0

    gatewayRef:

      from:

        name: tutorial-igw

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: RouteTable

metadata:

  name: tutorial-private-route-table-az1

spec:

  vpcRef:

    from:

      name: tutorial-vpc

  routes:

  - destinationCIDRBlock: 0.0.0.0/0

    natGatewayRef:

      from:

        name: tutorial-natgateway1

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Subnet

metadata:

  name: tutorial-public-subnet1

spec:

  availabilityZone: ap-northeast-2a

  cidrBlock: 10.0.0.0/20

  mapPublicIPOnLaunch: true

  vpcRef:

    from:

      name: tutorial-vpc

  routeTableRefs:

  - from:

      name: tutorial-public-route-table

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Subnet

metadata:

  name: tutorial-private-subnet1

spec:

  availabilityZone: ap-northeast-2a

  cidrBlock: 10.0.128.0/20

  vpcRef:

    from:

      name: tutorial-vpc

  routeTableRefs:

  - from:

      name: tutorial-private-route-table-az1

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: SecurityGroup

metadata:

  name: tutorial-security-group

spec:

  description: "ack security group"

  name: tutorial-sg

  vpcRef:

    from:

      name: tutorial-vpc

  ingressRules:

    - ipProtocol: tcp

      fromPort: 22

      toPort: 22

      ipRanges:

        - cidrIP: "0.0.0.0/0"

          description: "ingress"

EOF

# Create the VPC environment

**kubectl apply -f vpc-workflow.yaml**

# [Terminal 1] Once the NAT gateway is created, the ID of the tutorial-private-route-table-az1 route table appears, followed by the ID of the tutorial-private-subnet1 subnet > takes about 5 minutes

**watch -d kubectl get routetables,subnet**
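Watching by eye works in a terminal. In a script, the same "wait until the ID shows up" step needs a polling loop with a timeout. A minimal sketch (`wait_until` is a hypothetical helper); in the lab the check command could test that `kubectl get subnets tutorial-private-subnet1 -o jsonpath={.status.subnetID}` returns a non-empty ID, but here a local stand-in function that succeeds on its third call is used so the loop can run anywhere:

```shell
#!/usr/bin/env bash
# wait_until <timeout-seconds> <interval-seconds> <command...>
# Re-runs the command until it exits 0; returns 1 if the timeout is reached first.
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( SECONDS + timeout ))
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Stand-in for "is the private subnet ID populated yet?": succeeds on the 3rd call
attempts=0
fake_subnet_ready() {
  attempts=$(( attempts + 1 ))
  (( attempts >= 3 ))
}

wait_until 10 1 fake_subnet_ready && echo "ready after ${attempts} checks"
```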

# Verify the VPC environment

kubectl describe vpcs

kubectl describe internetgateways

kubectl describe routetables

kubectl describe natgateways

kubectl describe elasticipaddresses

kubectl describe securitygroups

# Compare the status of the two subnets while the deployment is in progress

kubectl describe subnets

...

Status:

**Conditions**:

Last Transition Time: 2023-06-04T02:15:25Z

Message: Reference resolution failed

Reason: the referenced resource is not synced yet. resource:RouteTable, namespace:default, name:tutorial-private-route-table-az1

**Status: Unknown**

Type: ACK.ReferencesResolved

...

Status:

Ack Resource Metadata:

Arn: arn:aws:ec2:ap-northeast-2:911283464785:subnet/subnet-0f5ae09e5d680030a

Owner Account ID: 911283464785

Region: ap-northeast-2

Available IP Address Count: 4091

**Conditions**:

Last Transition Time: 2023-06-04T02:14:45Z

**Status: True**

Type: ACK.ReferencesResolved

Last Transition Time: 2023-06-04T02:14:45Z

Message: Resource synced successfully

Reason:

**Status: True**

Type: ACK.ResourceSynced

...

Note that the VPC workflow can only be created after the earlier EC2 and VPC steps, because the CRDs (installed with the EC2 controller) must already exist.

Also, some resources are created late because of dependencies between resources, so you need to wait a while.
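The creation order in `vpc-workflow.yaml` follows from the `*Ref` fields: a resource is only created once the resources it references are synced. Feeding those edges to `tsort` (coreutils) yields one valid creation order and shows why `tutorial-private-subnet1` can only appear after the NAT gateway and the private route table. The edge list is transcribed by hand from the manifest; it illustrates the dependency graph and is not something ACK outputs.

```shell
#!/usr/bin/env bash
# "A B" means A must be synced before B (B references A through a *Ref field)
edges='
tutorial-vpc                      tutorial-igw
tutorial-vpc                      tutorial-public-route-table
tutorial-igw                      tutorial-public-route-table
tutorial-vpc                      tutorial-public-subnet1
tutorial-public-route-table       tutorial-public-subnet1
tutorial-public-subnet1           tutorial-natgateway1
tutorial-eip1                     tutorial-natgateway1
tutorial-vpc                      tutorial-private-route-table-az1
tutorial-natgateway1              tutorial-private-route-table-az1
tutorial-vpc                      tutorial-private-subnet1
tutorial-private-route-table-az1  tutorial-private-subnet1
tutorial-vpc                      tutorial-security-group
'

# Topological sort: every resource is listed after everything it depends on
order=$(tsort <<< "$edges")
printf '%s\n' "$order"

# position <name>: 1-based line number of a resource in the computed order
position() { grep -nx "$1" <<< "$order" | cut -d: -f1; }
```

`tsort` may print a different but equally valid order on another system. Only the relative positions of dependent resources are guaranteed.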

Now let's create an instance in the public subnet.

# Check the public subnet ID

PUBSUB1=$(kubectl get subnets **tutorial-public-subnet1** -o jsonpath={.status.subnetID})

echo $PUBSUB1

# Check the security group ID

TSG=$(kubectl get securitygroups **tutorial-security-group** -o jsonpath={.status.id})

echo $TSG

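# Look up the latest Amazon Linux 2 AMI ID (describe-images does not return images in date order, so sort by CreationDate and take the last one)

AL2AMI=$(aws ec2 describe-images --owners amazon --filters "Name=name,Values=amzn2-ami-hvm-2.0.*-x86_64-gp2" --query 'sort_by(Images, &CreationDate)[-1].ImageId' --output text)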

echo $AL2AMI

# Set the name of your own SSH key pair

MYKEYPAIR=<your SSH key pair name>

MYKEYPAIR=kp-gasida

**# Check the variables > make sure the subnet ID in particular is set!**

echo $PUBSUB1 , $TSG , $AL2AMI , $MYKEYPAIR
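Because the `Instance` manifest embeds `$PUBSUB1`, `$TSG`, and `$AL2AMI` directly, an empty variable (for example, before the subnet has synced) produces a manifest with a blank field. A small check sketch, assuming the usual AWS ID shape of a prefix (`subnet`, `sg`, `ami`) followed by a hyphen and lowercase hex characters; `check_id` is a hypothetical helper and the security group and AMI IDs are placeholders:

```shell
#!/usr/bin/env bash
# check_id <label> <value> <prefix>: fail unless value looks like "<prefix>-<hex>"
check_id() {
  local label="$1" value="$2" prefix="$3"
  if [[ "$value" =~ ^${prefix}-[0-9a-f]+$ ]]; then
    echo "OK   $label=$value"
  else
    echo "FAIL $label='${value}' (expected ${prefix}-...)" >&2
    return 1
  fi
}

# Placeholder values; in the lab these come from the kubectl/aws queries above
PUBSUB1=subnet-0f5ae09e5d680030a
TSG=sg-0123456789abcdef0
AL2AMI=ami-0123456789abcdef0

check_id PUBSUB1 "$PUBSUB1" subnet &&
check_id TSG "$TSG" sg &&
check_id AL2AMI "$AL2AMI" ami
```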

# [Terminal 1] Monitoring

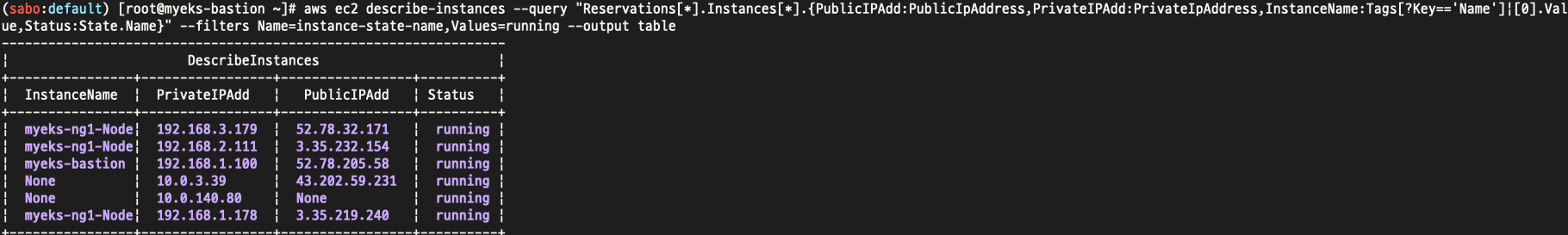

while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table; date ; sleep 1 ; done

# Create an instance in the public subnet

cat <<EOF > tutorial-bastion-host.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Instance

metadata:

  name: tutorial-bastion-host

spec:

  imageID: $AL2AMI # AL2 AMI ID - ap-northeast-2

  instanceType: t3.medium

  subnetID: $PUBSUB1

  securityGroupIDs:

  - $TSG

  keyName: $MYKEYPAIR

  tags:

    - key: producer

      value: ack

EOF

**kubectl apply -f tutorial-bastion-host.yaml**

# Verify the instance

**kubectl** get instance

**kubectl** describe instance

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

As shown above, the new instance has been created.

However, you will not be able to connect to it yet.

An egress rule needs to be added, as below.

cat <<EOF > modify-sg.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: SecurityGroup

metadata:

  name: tutorial-security-group

spec:

  description: "ack security group"

  name: tutorial-sg

  vpcRef:

    from:

      name: tutorial-vpc

  ingressRules:

    - ipProtocol: tcp

      fromPort: 22

      toPort: 22

      ipRanges:

        - cidrIP: "0.0.0.0/0"

          description: "ingress"

  egressRules:

    - ipProtocol: '-1'

      ipRanges:

        - cidrIP: "0.0.0.0/0"

          description: "egress"

EOF

kubectl apply -f modify-sg.yaml

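# Verify the change >> check the outbound rule on the security group

kubectl logs -n $ACK_SYSTEM_NAMESPACE -l k8s-app=ec2-chart -f

Next, let's create an instance in the private subnet.

# Check the private subnet ID >> it only appears after the NAT gateway is created and the route table/subnet IDs are populated, so this takes a while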

PRISUB1=$(kubectl get subnets **tutorial-private-subnet1** -o jsonpath={.status.subnetID})

**echo $PRISUB1**

**# Check the variables > make sure the private subnet ID in particular is set!**

echo $PRISUB1 , $TSG , $AL2AMI , $MYKEYPAIR

# Create an instance in the private subnet

cat <<EOF > tutorial-instance-private.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Instance

metadata:

  name: tutorial-instance-private

spec:

  imageID: $AL2AMI # AL2 AMI ID - ap-northeast-2

  instanceType: t3.medium

  subnetID: $PRISUB1

  securityGroupIDs:

  - $TSG

  keyName: $MYKEYPAIR

  tags:

    - key: producer

      value: ack

EOF

**kubectl apply -f tutorial-instance-private.yaml**

# Verify the instance

**kubectl** get instance

**kubectl** describe instance

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

As shown above, the private instance has been created as well.

RDS works the same way.

# Set the service name variable and download the helm chart

**export SERVICE=rds**

export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

# Check the helm chart

tree ~/$SERVICE-chart

# Install the ACK RDS controller

export ACK_SYSTEM_NAMESPACE=ack-system

export AWS_REGION=ap-northeast-2

helm install -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation

helm list --namespace $ACK_SYSTEM_NAMESPACE

kubectl -n $ACK_SYSTEM_NAMESPACE get pods -l "app.kubernetes.io/instance=ack-$SERVICE-controller"

**kubectl get crd | grep $SERVICE**

# Create an iamserviceaccount - AWS IAM role bound to a Kubernetes service account

eksctl create iamserviceaccount --name ack-$SERVICE-controller --namespace $ACK_SYSTEM_NAMESPACE --cluster $CLUSTER_NAME --attach-policy-arn $(aws iam list-policies --query "Policies[?PolicyName=='AmazonRDSFullAccess'].Arn" --output text) --override-existing-serviceaccounts --approve

# Verify >> in the web console, check the CloudFormation stack >> IAM role

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

# Inspecting the newly created Kubernetes Service Account, we can see the role we want it to assume in our pod.

kubectl get sa -n $ACK_SYSTEM_NAMESPACE

kubectl describe sa ack-$SERVICE-controller -n $ACK_SYSTEM_NAMESPACE

# Restart ACK service controller deployment using the following commands.

kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

# Confirm that IRSA added the env vars and volume to the pod

kubectl describe pod -n $ACK_SYSTEM_NAMESPACE -l k8s-app=$SERVICE-chart

...

# Create a secret for the DB password

RDS_INSTANCE_NAME="<your instance name>"

RDS_INSTANCE_PASSWORD="<your instance password>"

RDS_INSTANCE_NAME=**myrds**

RDS_INSTANCE_PASSWORD=**qwe12345**

kubectl create secret generic "${RDS_INSTANCE_NAME}-password" --from-literal=password="${RDS_INSTANCE_PASSWORD}"

# Verify

kubectl get secret $RDS_INSTANCE_NAME-password
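The lab hardcodes `qwe12345` for simplicity. A sketch for generating a random password instead, assuming a 16-character length; restricting it to letters and digits sidesteps the characters RDS rejects in master passwords (`/`, `"`, `@`, and space). `gen_password` is a made-up helper name:

```shell
#!/usr/bin/env bash
# Generate a random alphanumeric password of the given length (default 16)
gen_password() {
  local length="${1:-16}"
  # tr keeps only A-Z, a-z, 0-9 from the random byte stream; head stops after $length chars
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
  echo
}

RDS_INSTANCE_PASSWORD=$(gen_password 16)
echo "generated a ${#RDS_INSTANCE_PASSWORD}-character password"
# Then create the secret as above:
# kubectl create secret generic "${RDS_INSTANCE_NAME}-password" --from-literal=password="${RDS_INSTANCE_PASSWORD}"
```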

# [Terminal 1] Monitoring

RDS_INSTANCE_NAME=myrds

watch -d "kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'"

# Create the RDS instance: takes up to about 15 minutes >> try adding the other options you need, such as security groups and subnets!

cat <<EOF > rds-mariadb.yaml

apiVersion: rds.services.k8s.aws/v1alpha1

kind: DBInstance

metadata:

  name: "${RDS_INSTANCE_NAME}"

spec:

  allocatedStorage: 20

  dbInstanceClass: db.t4g.micro

  dbInstanceIdentifier: "${RDS_INSTANCE_NAME}"

  engine: mariadb

  engineVersion: "10.6"

  masterUsername: "admin"

  masterUserPassword:

    namespace: default

    name: "${RDS_INSTANCE_NAME}-password"

    key: password

EOF

kubectl apply -f rds-mariadb.yaml

# Verify the creation

kubectl get dbinstances ${RDS_INSTANCE_NAME}

kubectl describe dbinstance "${RDS_INSTANCE_NAME}"

aws rds describe-db-instances --db-instance-identifier $RDS_INSTANCE_NAME | jq

kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'

Db Instance Status: **creating**

kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'

Db Instance Status: **backing-up**

kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'

Db Instance Status: **available**
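The checks above grep the `Db Instance Status` line out of `kubectl describe`. A sketch that extracts just the value so a script can branch on it. It parses the describe text format shown above and is exercised against a short illustrative sample, not captured output:

```shell
#!/usr/bin/env bash
# Print the value of the "Db Instance Status:" line from kubectl describe output on stdin
db_status() {
  awk -F': *' '/Db Instance Status:/ { print $2; exit }'
}

# Illustrative excerpt in the describe format
sample='  Db Instance Identifier:  myrds
  Db Instance Status:      backing-up'
status=$(printf '%s\n' "$sample" | db_status)

case "$status" in
  available) echo "ready" ;;
  creating|backing-up|modifying) echo "still provisioning: $status" ;;
  *) echo "unexpected status: $status" ;;
esac
```

In the lab the input would be `kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | db_status`.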

# Wait for creation to finish: the command exits normally once the condition given in --for is met

kubectl wait dbinstances ${RDS_INSTANCE_NAME} --for=condition=ACK.ResourceSynced --timeout=15m

*dbinstance.rds.services.k8s.aws/myrds condition met*

Next, we study Flux.

The installation steps are as follows.

# Install the Flux CLI

**curl -s https://fluxcd.io/install.sh | sudo bash**

. <(flux completion bash)

# Check the version

**flux --version**

flux version 2.0.0-rc.5

# Set your own GitHub token and username

export GITHUB_TOKEN=

export GITHUB_USER=

export GITHUB_TOKEN=ghp_###

export GITHUB_USER=gasida

# Bootstrap

## Creates a git repository **fleet-infra** on your GitHub account.

## Adds Flux component manifests to the repository.

## **Deploys** Flux Components to your Kubernetes Cluster.

## Configures Flux components to track the path /clusters/my-cluster/ in the repository.

**flux bootstrap github --owner=$GITHUB_USER --repository=fleet-infra --branch=main --path=./clusters/my-cluster --personal**

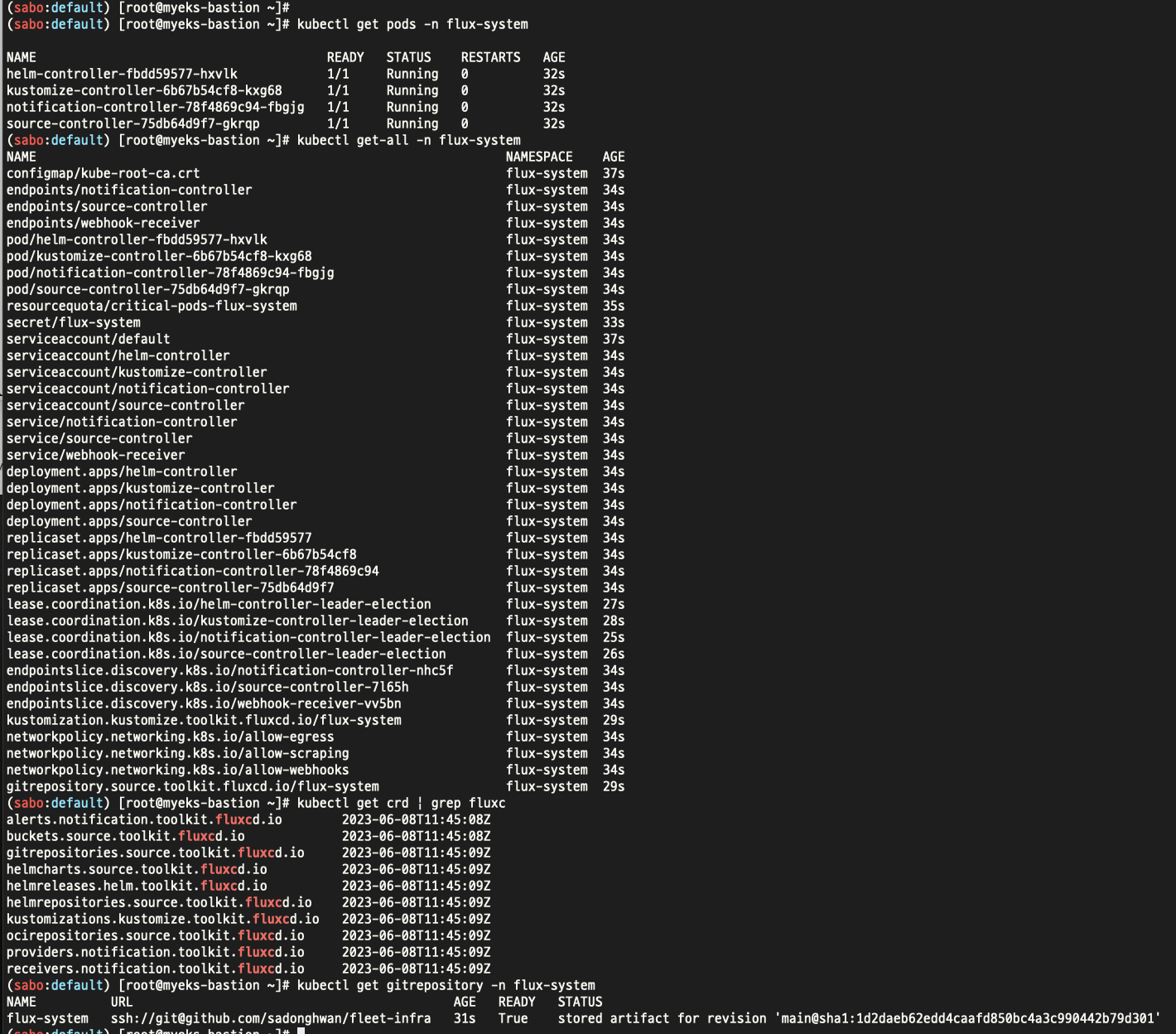

# Verify the installation

kubectl get pods -n flux-system

kubectl get-all -n flux-system

kubectl get crd | grep fluxc

**kubectl get gitrepository -n flux-system**

NAME URL AGE READY STATUS

flux-system ssh://git@github.com/gasida/fleet-infra 4m6s True stored artifact for revision 'main@sha1:4172548433a9f4e089758c3512b0b24d289e9702'

Flux is now installed, as shown above.

The GitOps tool is installed as follows.

# Install the gitops tool

curl --silent --location "https://github.com/weaveworks/weave-gitops/releases/download/v0.24.0/gitops-$(uname)-$(uname -m).tar.gz" | tar xz -C /tmp

sudo mv /tmp/gitops /usr/local/bin

gitops version
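The download URL embeds `$(uname)` and `$(uname -m)`, so on an x86_64 Linux workstation it resolves to `gitops-Linux-x86_64.tar.gz`. A sketch that builds and prints the URL first, which makes a wrong version or platform easier to spot before piping into `tar`. The helper name `gitops_url` is made up; the URL pattern and `v0.24.0` come from the command above:

```shell
#!/usr/bin/env bash
# Build the weave-gitops release URL for a version, OS and architecture
# (OS and architecture default to the current machine's uname values)
gitops_url() {
  local version="$1" os="${2:-$(uname)}" arch="${3:-$(uname -m)}"
  printf 'https://github.com/weaveworks/weave-gitops/releases/download/%s/gitops-%s-%s.tar.gz\n' \
    "$version" "$os" "$arch"
}

gitops_url v0.24.0 Linux x86_64
# To download for the current machine:
# curl --silent --location "$(gitops_url v0.24.0)" | tar xz -C /tmp
```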

# Install the Flux dashboard

PASSWORD="password"

gitops create dashboard ww-gitops --password=$PASSWORD

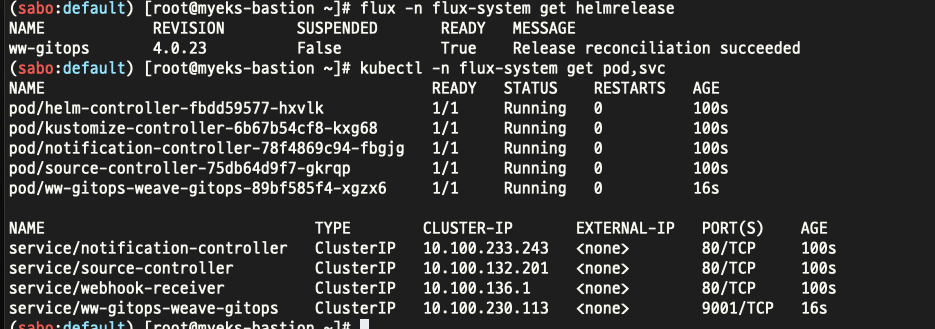

# Verify

flux -n flux-system get helmrelease

kubectl -n flux-system get pod,svc

Next, set up the ingress.

CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

echo $CERT_ARN

# Configure the Ingress

cat <<EOT > gitops-ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

  name: gitops-ingress

  annotations:

    alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

    alb.ingress.kubernetes.io/group.name: study

    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

    alb.ingress.kubernetes.io/scheme: internet-facing

    alb.ingress.kubernetes.io/ssl-redirect: "443"

    alb.ingress.kubernetes.io/success-codes: 200-399

    alb.ingress.kubernetes.io/target-type: ip

spec:

  ingressClassName: alb

  rules:

  - host: gitops.$MyDomain

    http:

      paths:

      - backend:

          service:

            name: ww-gitops-weave-gitops

            port:

              number: 9001

        path: /

        pathType: Prefix

EOT

kubectl apply -f gitops-ingress.yaml -n flux-system

# Verify the deployment

kubectl get ingress -n flux-system

# Check the GitOps access info >> open it in a browser and look around

echo -e "GitOps Web https://gitops.$MyDomain"

Here is the source for the hello-world example.

# Create a source: types - git, helm, oci, bucket

# flux create source {source type}

# Create a git source from the repo prepared by 악분 (Sungwook Choi)

GITURL="https://github.com/sungwook-practice/fluxcd-test.git"

**flux create source git nginx-example1 --url=$GITURL --branch=main --interval=30s**

# Check the source

flux get sources git

kubectl -n flux-system get gitrepositories

Then create the Flux application as follows.

# [Terminal] Monitoring

watch -d kubectl get pod,svc nginx-example1

# Create the Flux application: nginx-example1

flux create **kustomization** **nginx-example1** --target-namespace=default --interval=1m --source=nginx-example1 --path="**./nginx**" --health-check-timeout=2m

# Verify

kubectl get pod,svc nginx-example1

kubectl get kustomizations -n flux-system

flux get kustomizations
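The two `flux create` commands above create a `GitRepository` and a `Kustomization` object in `flux-system`. Committing equivalent manifests under `clusters/my-cluster` in the `fleet-infra` repository would keep this setup itself in Git. A sketch that writes them out, assuming the Flux 2.x v1 APIs (`source.toolkit.fluxcd.io/v1`, `kustomize.toolkit.fluxcd.io/v1`). The field values mirror the CLI flags above, `timeout` is assumed to be what `--health-check-timeout` sets, and `prune: false` is an assumption about the CLI default. Adding `--export` to the same `flux create` commands prints the authoritative YAML for your installed CLI.

```shell
#!/usr/bin/env bash
set -euo pipefail

GITURL="https://github.com/sungwook-practice/fluxcd-test.git"
outdir="$(mktemp -d)/clusters/my-cluster"   # stands in for a local clone of fleet-infra
mkdir -p "$outdir"

cat <<EOF > "$outdir/nginx-example1.yaml"
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 30s
  url: ${GITURL}
  ref:
    branch: main
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 1m
  path: ./nginx
  prune: false
  sourceRef:
    kind: GitRepository
    name: nginx-example1
  targetNamespace: default
  timeout: 2m
EOF

cat "$outdir/nginx-example1.yaml"
```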

The installation is complete and verified.
